On tools and exponentials

by Soham Govande (@sohamgovande)

June 9, 2024

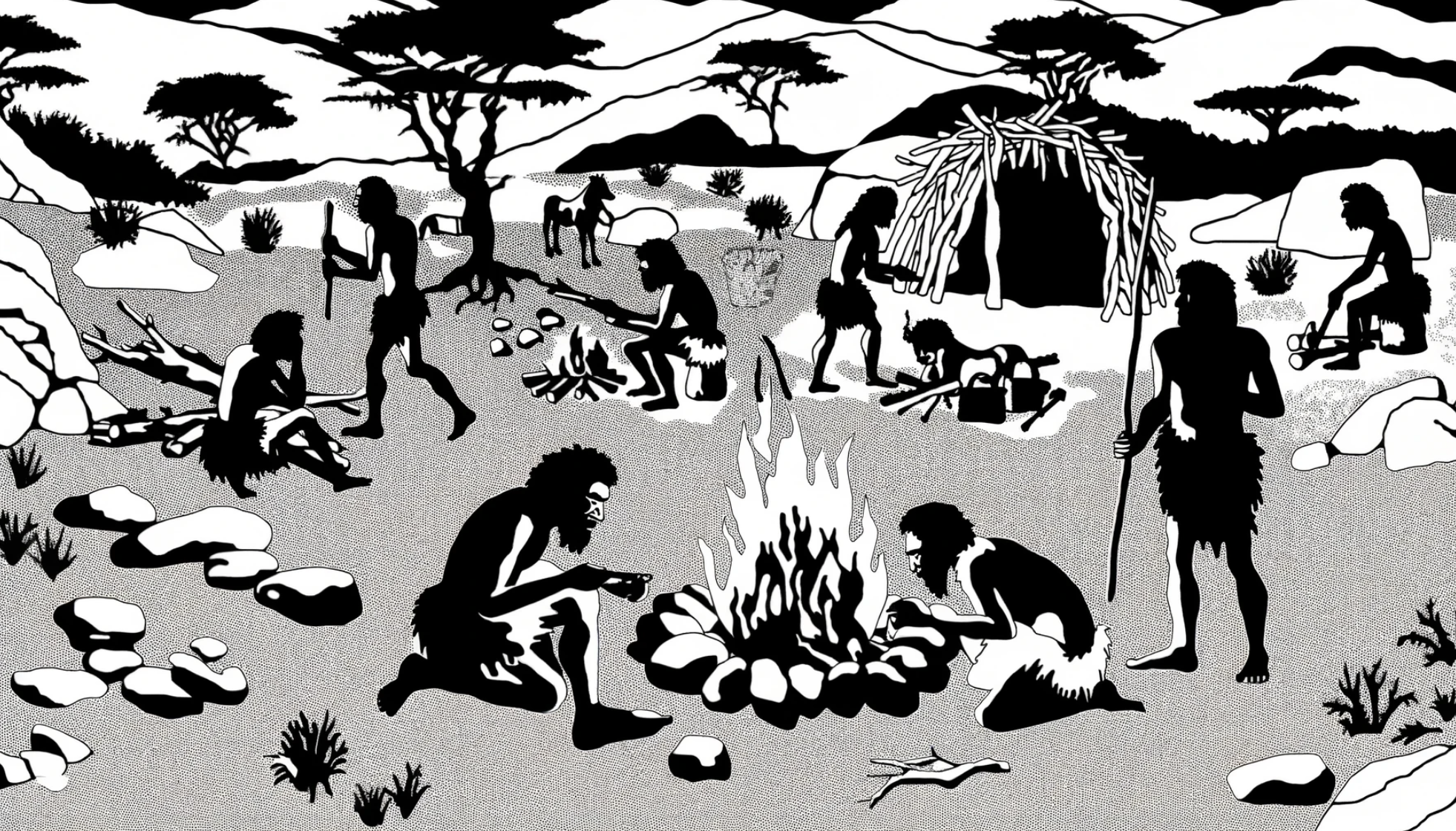

Over human history, we've created more and more powerful tools. Control over fire, our first significant tool, accelerated brain growth. The domestication of plants, our next invention, led to sedentary societies and specialized labor markets. More recently, the discovery of electricity fostered an unprecedented era of industrial innovation.

I've been reading Sapiens, and the most interesting thing Harari discusses is how every new tool is a multiplier on an individual's impact. When we were apes roaming the forests of Africa, there were few practical considerations aside from the constant need to find and collect food. Fire changed everything. Every unit of meat became more nutritious, and the more protein-dense meat someone had access to, the more powerful their brain became. This meant that every unit of time could become much more powerful if spent well. Farming would enable us to act upon this brainpower. Once food was secured, hunter-gatherer bands settled into permanent communities around farms. However, only a few people were needed to farm. The rest would specialize. This was the first time in human history that creators had the resources to freely think and build. The capacity of every individual was no longer limited by the hours they needed to spend searching for food, but rather by however many hours they could feasibly spend innovating.

It seems that increasingly better tools will accelerate all future growth, an idea that naturally lends itself to exponential curves. Indeed, by comparing the difference in times between some of the most monumental tools, we can see that the data reflect this.

- 5,000,000 years from the evolution of chimpanzees to the invention of fire (~1.5 million years ago)

- 1,000,000 years to create the first farms (~10,000 years ago)

- 10,000 years to the discovery of electricity (~200 years ago)

- 100 years to the first computing devices (~50 years ago)

- 50 years to artificial general intelligence (~5 years from now)
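The gaps in the list above can be compared step by step. The following is a minimal sketch, using only the rough figures from the list; the labels and the helper name `shrink_factors` are illustrative, not part of the original argument. It computes how many times shorter each gap is than the one before it.

```python
# Gaps between milestones, in years, copied from the list above.
# The final entry is a projection, not an observed gap.
gaps = [
    ("chimpanzees -> fire", 5_000_000),
    ("fire -> farms", 1_000_000),
    ("farms -> electricity", 10_000),
    ("electricity -> computing devices", 100),
    ("computing devices -> AGI (projected)", 50),
]


def shrink_factors(gaps):
    """Return how many times shorter each gap is than the previous one."""
    years = [y for _, y in gaps]
    return [prev / cur for prev, cur in zip(years, years[1:])]


factors = shrink_factors(gaps)
for (label, years), factor in zip(gaps[1:], factors):
    print(f"{label}: {years:,} years ({factor:g}x shorter than the previous gap)")
```

A perfectly exponential sequence would shrink by the same factor at every step. These rough figures shrink by different amounts from step to step, so they are better read as evidence of rapidly shrinking gaps than as a fit to one fixed rate.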

The time between breakthrough innovations is exponentially decreasing. With AGI on the horizon, what will the future look like? Will we be surrounded by breakthrough scientific and technological discoveries happening every day, then every minute, and eventually every second? This utopia sounds like a fantasy, but we're also notoriously bad at estimating exponentials.

Generalize or Specialize?

I'm not sure what the future will look like, but this perspective has deeply informed the type of person I want to be. A question that I ask myself very often is: Do I generalize or do I specialize? Should I get really good at one particular skill that will be deeply beneficial to the world, or should I be a generalist who is very effective at orchestrating the tools around them? Because the availability and creation of new tools are going to increase exponentially, it seems that to make the most use of them, you ought to be a generalist who is hyper-capable of context switching and adapting to new tools.

Of course, this is all quite theoretical. More concretely, I spend most of my days thinking about, or working on, startups. Naturally, I wonder what this will mean for my little corner of Palo Alto. One application of this concept is in the skillset distributions of successful founding teams.

The typical YC advice on assembling a founding team has been summed up by two words: "complementary skills." You want someone who builds and someone who sells. Each person does what they are best at, making the team collectively strong across all areas. To formalize this mathematically: given two skills $x$ and $y$, one can be excellent at either $x$ or $y$, or decent at both $x$ and $y$, but not excellent at both $x$ and $y$. In vector notation, a person can be at $(1, 0)$, $(0, 1)$, or $(0.5, 0.5)$, but not at $(1, 1)$.

Historically, this distribution has favored specialist founders (i.e., at the basis vectors $(1, 0)$ and $(0, 1)$), but disadvantaged people who were not at either extreme. Interestingly, the distribution of successful founders is shifting from these extremes towards generalists who are somewhere in the middle. There is a two-fold explanation for this.

- First, new tools are a multiplicative factor that increases your leverage across every dimension. For example, if you were pretty nontechnical (say, low on the coding axis), you can use AI tools to ship applications ten times more easily than you previously could. The more dimensions there are, the greater the nonlinear benefit of a multiplier applied across every one of them.

- Second, (current) AI tools are effective at getting you from non-expert to mid-level expert, but not at helping people who are already experts. This means that to make the best use of tools, it helps to be relatively knowledgeable about a lot of different things. Sure, you should probably know your particular area of expertise much, much better than others do. But not being the smartest person in the world at it is okay, assuming that you have the right tools in your belt.
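The two points above can be combined into a toy numerical model. This is only a sketch under loud assumptions that do not come from the post itself: overall output is modeled as the product of per-skill levels, every skill level is a positive number with 1.0 meaning expert, and a tool multiplies each skill by the same factor but cannot push it past 1.0 (which encodes "tools help non-experts more than experts"). The names `boost`, `output`, and `leverage`, and the sample skill vectors, are hypothetical.

```python
from math import prod


def boost(skill, multiplier, cap=1.0):
    """Apply a tool multiplier to one skill, saturating at `cap`.

    The cap is an assumption: it models tools lifting non-experts
    toward expert level without helping people who are already there.
    """
    return min(skill * multiplier, cap)


def output(skills):
    """Toy assumption: overall output is the product of all skill levels."""
    return prod(skills)


def leverage(skills, multiplier):
    """Ratio of tool-assisted output to unassisted output (skills must be > 0)."""
    boosted = [boost(s, multiplier) for s in skills]
    return output(boosted) / output(skills)


specialist = [1.0, 0.1]      # expert at one skill, weak at the other
generalist = [0.5, 0.5]      # decent at both
generalist_3d = [0.5, 0.5, 0.5]

print("specialist leverage:", leverage(specialist, 2))
print("generalist leverage:", leverage(generalist, 2))
print("3-skill generalist leverage:", leverage(generalist_3d, 2))
```

Under these assumptions, the same 2x tool gives the generalist more leverage than the specialist, because the specialist's strongest skill is already at the cap. The gain also compounds with the number of skills: while no skill hits the cap, a multiplier of m across d skills raises output by m to the power d. A different output model (for example, a sum instead of a product) would change these numbers, so this illustrates the argument rather than proving it.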